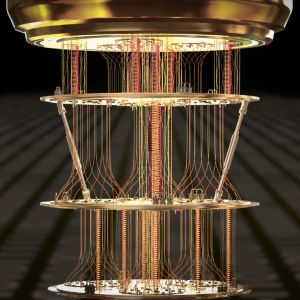

Tech companies in the United States are racing to scale quantum computers from lab prototypes to industrial machines, according to details shared by IBM, Google, Amazon, Microsoft, and others. Breakthroughs in chip design and error correction have narrowed the technical gaps, putting an end-of-decade target within reach for some, while others warn the road will be far longer.

IBM’s announcement in June laid out a full-scale design that fills in engineering details missing from its earlier plans. Jay Gambetta, who leads the company’s quantum program, said IBM now has a “clear path” to a machine that can outperform classical computers in tasks such as materials simulation and AI modeling before 2030. Google’s quantum research team, led by Julian Kelly, cleared one of its biggest technical barriers last year and says it will also deliver before the decade ends, with Kelly calling all remaining problems “surmountable.”

## Companies push to solve scaling challenges

Amazon’s quantum hardware chief Oskar Painter warned that even with major physics milestones behind them, the industrial phase could take 15–30 years. Meaningful performance requires a leap from fewer than 200 qubits, the basic units of quantum information, to more than one million. Scaling is hampered by qubit instability: qubits hold their useful quantum state for only fractions of a second.

IBM’s Condor chip, at 433 qubits, showed interference between components, an issue Rigetti Computing CEO Subodh Kulkarni described as “a nasty physics problem.” IBM says it anticipated the issue and is now using a different type of coupler to reduce interference.

Early systems relied on individually tuned qubits to improve performance, but that approach is unworkable at large scale. Companies are now developing more reliable components and cheaper manufacturing methods. Google aims to cut component costs tenfold so it can build a full-scale system for $1 billion.
Error correction, which duplicates data across qubits so that the loss of one doesn’t corrupt results, is seen as a requirement for scaling. Google is the only company to have shown a chip on which error correction improves as the system grows. Kelly said skipping this step would lead to “a very expensive machine that outputs noise.”

## Competing designs and government backing

IBM is betting on a different error correction method, called a low-density parity-check code, which it claims needs 90% fewer qubits than Google’s surface code approach. The surface code connects each qubit in a grid to its neighbors but requires more than one million qubits for useful work. IBM’s method requires long-distance connections between qubits, which are difficult to engineer. IBM says it has now achieved this, but analysts such as Mark Horvath at Gartner say the design still exists only in theory and must be proven in manufacturing.

Other technical hurdles remain: simplifying wiring, connecting multiple chips into modules, and building larger cryogenic fridges to keep systems near absolute zero. Superconducting qubits, used by IBM and Google, show strong progress but are difficult to control. Alternatives such as trapped ions, neutral atoms, and photons are more stable but slower and harder to connect into large systems.

Sebastian Weidt, CEO of UK-based Universal Quantum, says government funding decisions will likely narrow the field to a few contenders. Darpa, the Pentagon’s research agency, has launched a review to identify the fastest path to a practical system. Amazon and Microsoft are experimenting with new qubit designs, including exotic states of matter, while established players keep refining older technologies.

“Just because it’s hard, doesn’t mean it can’t be done,” Horvath said, summing up the industry’s determination to reach the million-qubit mark.
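Why does it matter that error correction “improves as systems grow”? A minimal classical sketch helps, assuming the simplest possible scheme: a repetition code that stores one bit as d copies, where each copy flips independently with probability p, and decodes by majority vote. This is only an analogy. It is not Google’s surface code or IBM’s low-density parity-check code, and real quantum codes must also correct phase errors without copying quantum states directly. The function name `logical_error_rate` is hypothetical.

```python
from math import comb


def logical_error_rate(p: float, d: int) -> float:
    """Exact probability that majority vote over d noisy copies decodes wrongly.

    Toy classical repetition code (an illustrative assumption, not a real
    quantum code): each of the d copies flips independently with
    probability p, and decoding fails when more than half of them flip.
    """
    if d < 1 or d % 2 == 0:
        raise ValueError("distance d must be a positive odd integer")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    # Sum the binomial probabilities of every majority-flipping outcome.
    return sum(
        comb(d, k) * p**k * (1 - p) ** (d - k)
        for k in range(d // 2 + 1, d + 1)
    )


if __name__ == "__main__":
    # Below this toy code's threshold (p < 0.5), more copies help;
    # above it, more copies make the result worse.
    for p in (0.01, 0.6):
        rates = [logical_error_rate(p, d) for d in (1, 3, 5, 7)]
        print(p, ["%.2e" % r for r in rates])
```

With p = 0.01, the failure rate falls from 1% with one copy to about 0.03% with three, and keeps shrinking as copies are added. With p = 0.6 it climbs instead. Kelly’s warning describes the same dynamic: hardware that stays on the wrong side of the threshold just gets bigger and noisier. Real quantum codes have far lower thresholds than this toy example.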
